To electronically transcribe and make handwriting in Europe electronically analysable. These are the functions of the Transkribus platform, one of the initiatives carried out by the READ project, funded by the European Union’s Horizon 2020 Research and Innovation programme.

Transcript of Gramsci Prison Notebooks

A concrete example of how Transkribus works is the transcription of Gramsci’s Prison Notebooks, tested by the director of the Gramsci centre for the Humanities, Massimo Mastrogregori.

Director, how did you come to discover the Transkribus platform and its services?

I discovered the Transkribus platform by doing some research on the Net, while I was looking for a program similar to Ocr (optical character recognition), but usable for the transcription of manuscripts. The initial purpose of this research was to have a computer acquire my manuscript archive, which in recent years has reached a considerable size and has become difficult to manage. But I thought that such a platform could also be used to pursue a less “practical” purpose.

What results have you obtained by applying Transkribus transcription and analysis tools to the Gramsci Prison Notebooks?

The first step was to upload images from some of Gramsci’s notebooks to the platform and use Transkribus for hand transcription. This step is essential, otherwise the software does not learn handwriting recognition: the text of the uploaded image is segmented line by line. Each line of the image-text corresponds to one line of text – transcribed manually.

Once I had reached the required amount of hand-written lines, I alerted the Transkribus team in Innsbruck, who produced a template according to which the platform now recognizes Gramsci’s handwriting, with a very low error rate.

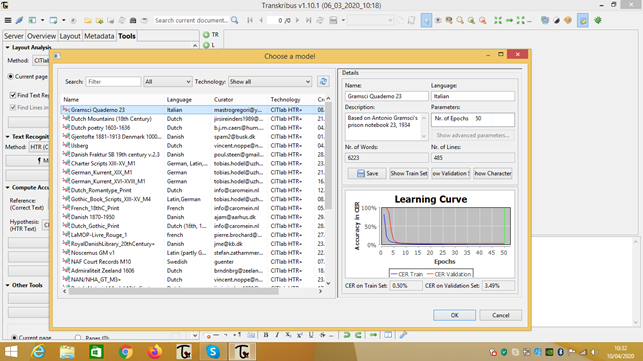

In the chart above you can see the learning curve: it goes down until it becomes flat. At the beginning of the training – which the program does by comparing the data entered and the text images – there is 100% error, at the end about 3%. Thanks to the Gramsci writing template created in Innsbruck, Transkribus is now able to do the transcription itself.

So I got the automatic transcription of Gramsci’s notebooks, the first texts of an Italian author to be processed by the program. Even though the final margin of error was low, it will take a few weeks of work to correct what was processed. The work, of course, does not end here. The research project of the Gramsci centre in San Marino deals with two related issues: how to analyze also “automatically” the Notebooks; how to produce an innovative electronic edition of the Gramscian text, which would not simply be a reproduction of the texts.

The interview with Daniele Fusi, digital philologist

We interviewed Daniele Fusi, researcher in Digital Textual Scholarship at the Venice University Centre for Digital and Public Humanities (VeDPH). The following is the first of three parts of the interview that Dr. Fusi kindly gave us about his experience as a digital philologist; the current frontier of electronic text analysis and the opportunities offered by the Transkribus platform.

Dr. Fusi, as a classicist scholar with a long experience in electronic text analysis, what are the main fields in which you have worked?

Although my training is as a classicist, especially in the fields of classical historical linguistics and metrics, I combine my research activity with that of an IT consultant designing and developing software systems for academic and non-academic institutions and private companies.

What emerges above all from my various activities in this field is a series of increasingly evident convergences towards models and architectures that tend towards a clearer structuring of digital content and its growing need for expansion and interconnection.

For example, experiences and reflections in the field of digital editions in various fields (literary, epigraphic, ancient, modern…) have led to propose new tools to simplify the creation of complex content, which now require the intrinsic nature of many documents or the systematic linguistic analysis carried out by NLP systems. The general idea here requires the dismissal of visions that the tradition of printing has accustomed us to, to embrace a true digital paradigm, modelling data as independently as possible from mental or technological conditioning. One of the tools created to foster this vision is an editing system (Cadmus) that operates in an open and expandable architecture, where data indifferently textual, metatextual or non-textual find a common place, while allowing to generate more traditional outputs such as XML-TEI.

Digital editions are now a real tool, so that systems capable of producing new data can be based on them. For example, I used digital corpora for a metric analysis system (Chiron) open to any language and poetic tradition, obtaining a huge amount of data of great detail and interest. This in turn brings new challenges: when 90 thousand verses analyzed lead to 80 million linguistic and metric data, it is necessary to adopt analysis techniques suitable for this scale, such as Machine Learning, able to highlight linguistic, metric and literary patterns extremely fertile for research, and to test their explanatory models by falsifying their predictions.

Moreover, in turn, such tools have repercussions on the corpora from which they draw the analysis material: the enormous detail of prosodic and metric data produced by the system can in turn return to the corpora in the form of annotation, and properly packaged search systems can take advantage of this, offering new possibilities for querying: hence, for example, a simple DBT system (Pythia) based on a higher level of abstraction and also created to process the data produced by my system.

Alongside resources natively conceived as digital, there is also the problem of recovering and reshaping an enormous heritage of corpora and scholarly work often still entrusted to paper media, or their digitized equivalent. This is a field in which I work for critical editions, dictionaries, corpora, and archival documents, creating conversion and remodeling systems (Proteus) that beyond their practical purpose can teach something about the difference between digitized and digital text.

To be continued…